Delta robot

The delta robot is one of the best-known robot manipulators. It was designed mainly for fast pick-and-place tasks in manufacturing. We wanted to bring that advantage to a real-world problem, so the application we are using the delta robot for is “chestnut picking”. Of course, the application is not limited to chestnuts: this kind of robot and rover can do much more, depending on which object you want to grab. We just need to retrain the object-detection neural network on the new object. One of the challenges for us is combining rover, robot manipulator, and AI technologies to help with human work.

Robot Specifications

- ROBOTIS Dynamixel XM540-W270-R servos for the three joints

- payload without gripper: 400 g (the gripper weighs around 300 g)

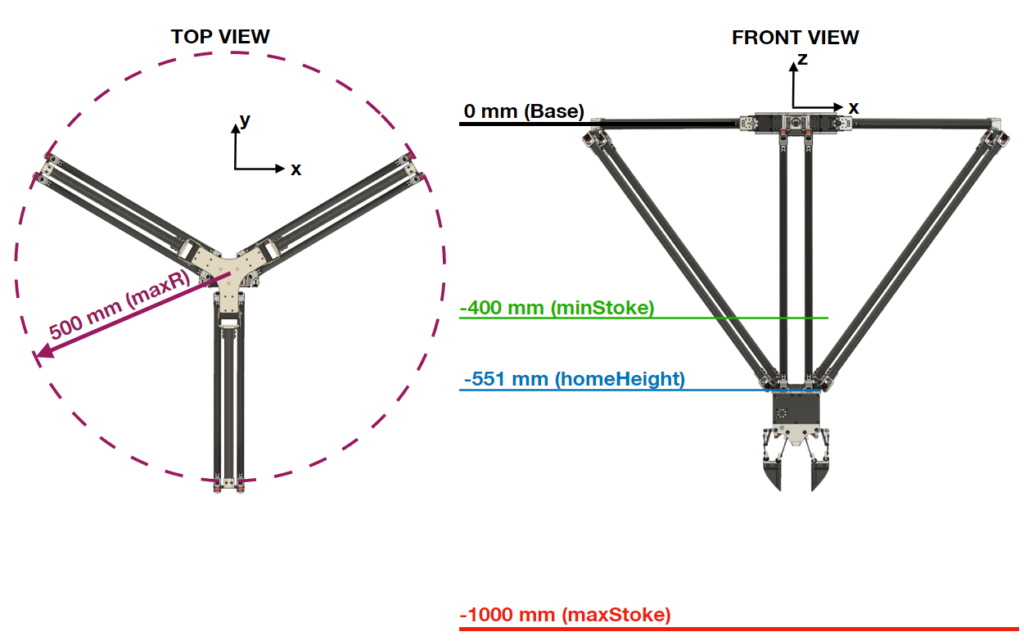

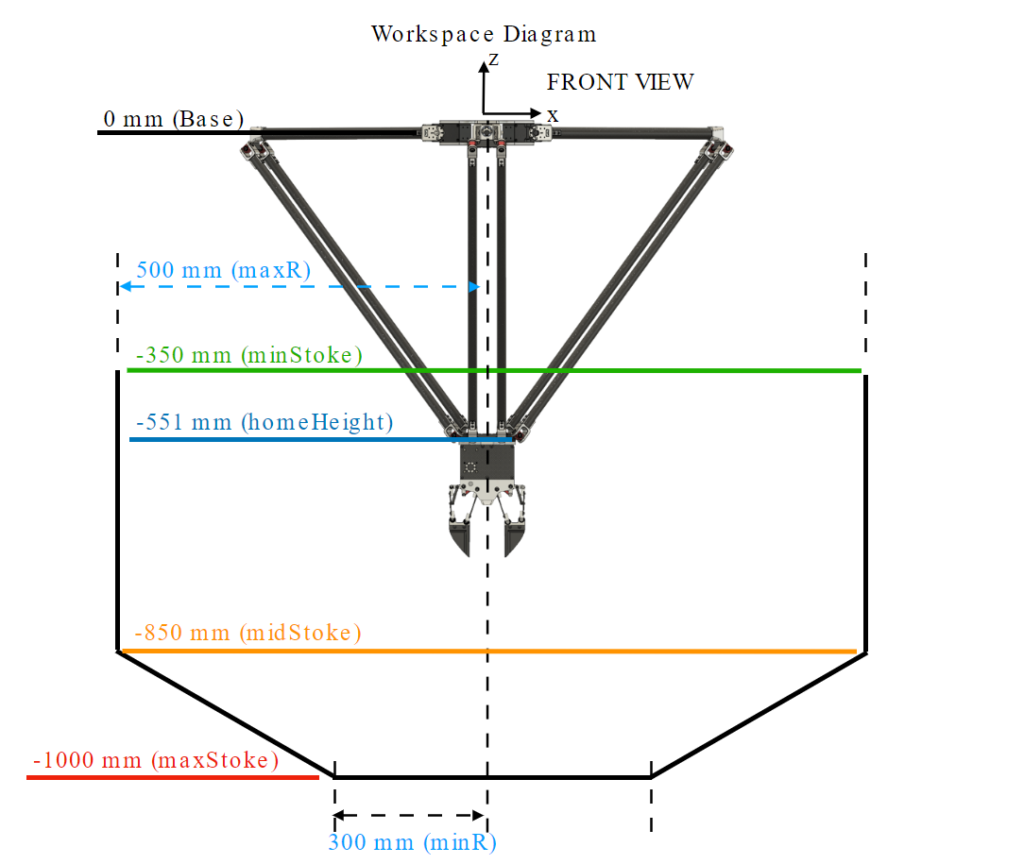

- working range in the XY plane: a circle of 500 mm radius

- stroke range along the Z axis: 700 mm

- robot weight: around 2 kg

This configuration is sufficient for lightweight payloads such as chestnuts, PET bottles, or some plants. We could also swap the gripper for something else, such as a spray nozzle or a cutting blade, depending on what you want to use the robot for.

The Dynamixel servo is a good option for a prototype like this. ROBOTIS also provides an SDK, which makes development much easier. You can find more details on their site.

Algorithms

Let's assume at first that the rover is not moving and focus only on the delta robot. There are two main parts to talk about: the chestnut-picker script and the chestnut-detection script. The arm always starts at the home position, which is shown in the right-hand image above. A webcam is attached at the gripper, and chestnut-detection runs continuously while the robot is in this pose. When a chestnut appears in the camera frame, its center point in pixel coordinates is converted to the robot's world coordinates and sent to the chestnut-picker script, which moves the robot. Once the robot receives the data, detection pauses while the robot grabs the detected chestnut and finishes the job; detection then resumes with the robot back at the home position. Because detection always runs from the same pose, pixel coordinates and world coordinates can be mapped linearly. When several chestnuts appear in the frame, all of their positions are stored, and the chestnut-picker script picks every one of them before returning to detection. As you can see, the camera is offset from the center of the robot along the positive Y-axis, so we also need to add this offset to the world coordinates of the detected chestnut for the robot to grab it correctly.
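To make the linear mapping and the camera offset concrete, here is a minimal sketch in Python. It is not the actual chestnut-picker code: the image size, the millimetre-per-pixel scales, the offset value, the axis orientation (image right as robot +X, image up as robot +Y), and the names `CameraCalibration` and `pixel_to_robot_xy` are all assumptions made for illustration. Real values would have to be measured at the home position.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraCalibration:
    """Hypothetical calibration values, valid only at the robot home pose."""
    image_width_px: int = 640          # assumed webcam resolution
    image_height_px: int = 480
    mm_per_px_x: float = 0.5           # assumed linear scale, to be measured
    mm_per_px_y: float = 0.5
    camera_offset_y_mm: float = 60.0   # assumed camera offset along robot +Y


def pixel_to_robot_xy(u: float, v: float, cal: CameraCalibration) -> tuple[float, float]:
    """Map a pixel (u, v) to robot XY in millimetres with a linear model."""
    # Move the origin from the top-left corner to the image center.
    du = u - cal.image_width_px / 2
    dv = v - cal.image_height_px / 2
    # Assumed orientation: image right is robot +X, image up is robot +Y.
    x_mm = du * cal.mm_per_px_x
    y_mm = -dv * cal.mm_per_px_y
    # The camera sits off-center along +Y, so add that offset.
    return x_mm, y_mm + cal.camera_offset_y_mm


if __name__ == "__main__":
    cal = CameraCalibration()
    # Several detections in one frame: convert and store all of them
    # before handing the list to the picking routine.
    detections_px = [(320, 240), (420, 140)]
    targets_mm = [pixel_to_robot_xy(u, v, cal) for u, v in detections_px]
    print(targets_mm)
```

A chestnut at the exact image center maps to the camera offset itself, which is a quick sanity check when tuning the calibration values.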

Now we bring the rover into the game too. We can still simply make the rover stop when a chestnut is found. But, as you can imagine, the machine does not stop exactly when we want it to: the wheels take some time to stop, which shifts the chestnut's coordinates. So there is a rover offset that we need to take into account. This rover offset was found by trial and error, so for the demonstration the rover moves at a constant speed only. In the future, I will need to read the rover speed from the flight controller and calculate the offset from it.
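One possible way to calculate the rover offset from speed, rather than tuning it by hand, is sketched below. It assumes a simple model where the rover keeps travelling at its current speed for a fixed stopping delay, and that the rover drives along the robot's +Y axis, so the chestnut appears shifted toward -Y once the rover has stopped. The delay value, the axis choice, and the function names are hypothetical and are not taken from the demonstration code.

```python
def rover_offset_mm(speed_mm_s: float, stop_delay_s: float) -> float:
    """Distance the rover still travels after the stop command.

    Assumed model: constant speed during a fixed stopping delay.
    """
    return speed_mm_s * stop_delay_s


def compensate_for_rover(
    x_mm: float, y_mm: float, speed_mm_s: float, stop_delay_s: float
) -> tuple[float, float]:
    """Shift a detected target to where it lies after the rover has stopped."""
    # Assumption: the rover moves along robot +Y, so the target
    # moves toward -Y in the robot frame by the overshoot distance.
    return x_mm, y_mm - rover_offset_mm(speed_mm_s, stop_delay_s)


if __name__ == "__main__":
    # Example: 120 mm/s rover speed and a 0.25 s stopping delay.
    print(compensate_for_rover(50.0, 110.0, 120.0, 0.25))
```

At a constant demonstration speed this reduces to a single fixed offset, which matches the trial-and-error value; with live speed data the same function would give a per-pick correction.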

Please check out my sample code for chestnut-picker and chestnut-detection on my GitHub if you’re interested.

The CAD file of the delta robot is available here.